News

IMI-BAS and ICMS-Sofia Launched the Atanasoff Memorial Lecture Series Celebrating John Vincent Atanasoff

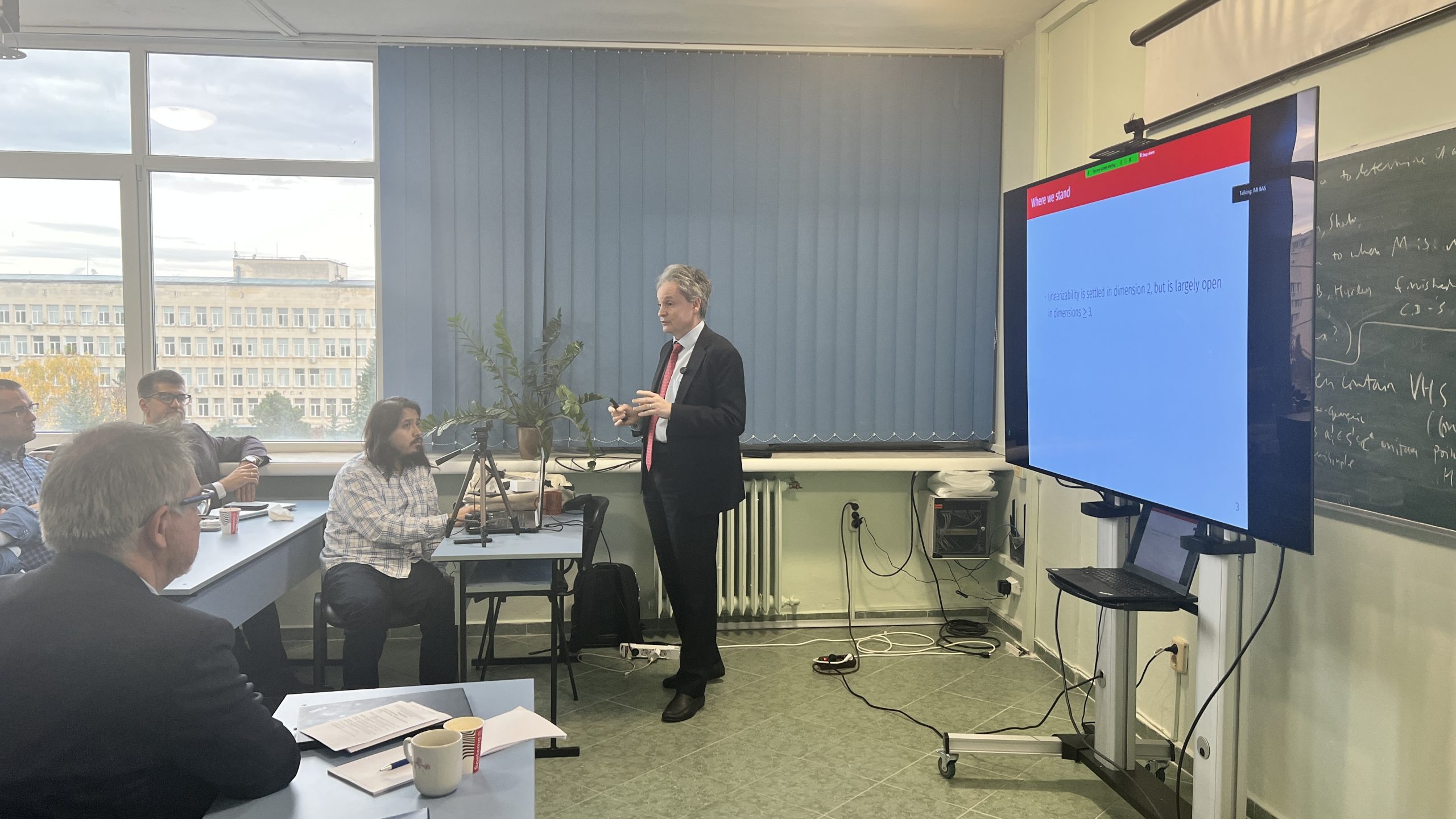

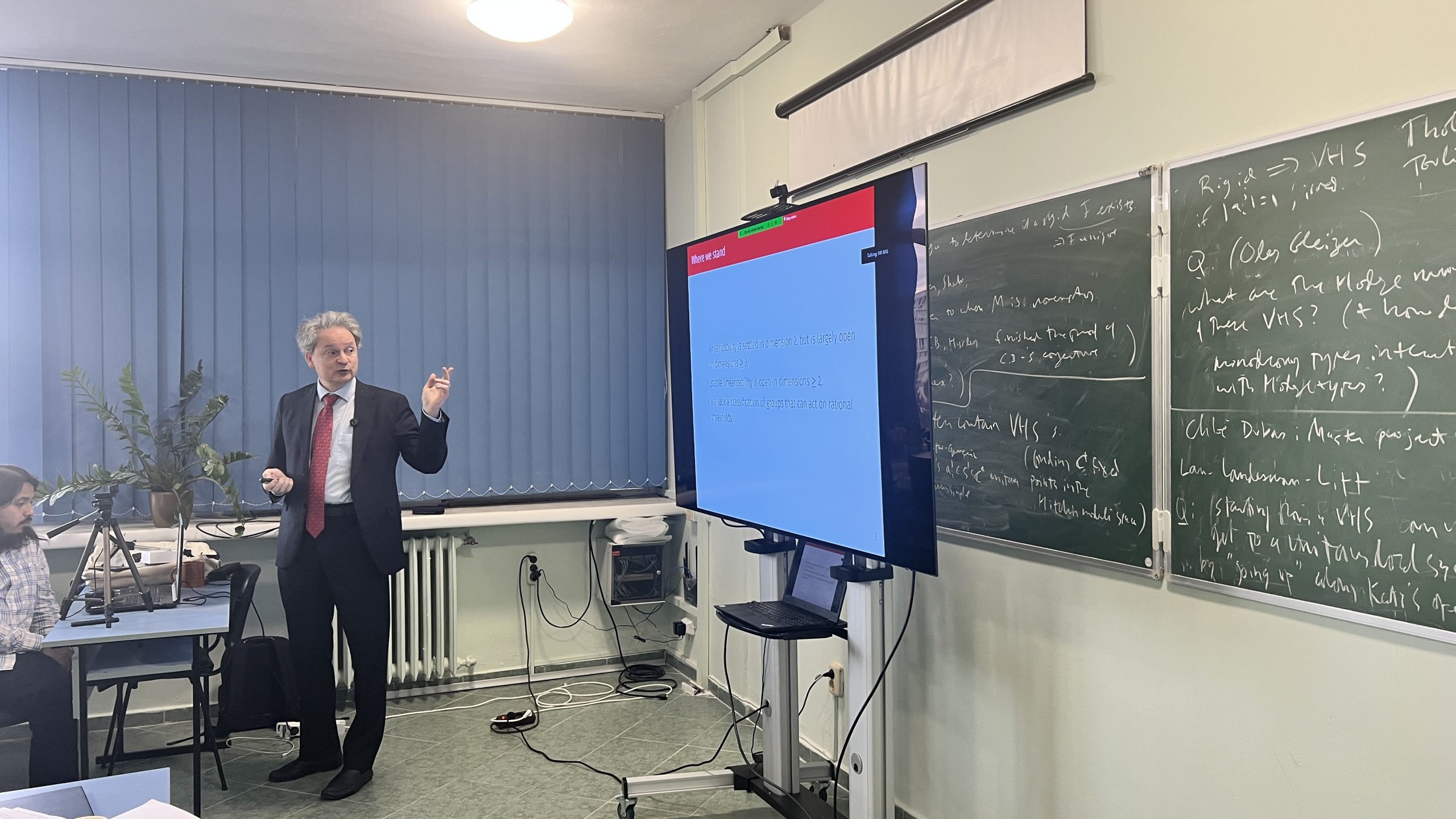

On November 10, 2025, the Institute of Mathematics and Informatics of the Bulgarian Academy of Sciences (IMI-BAS) hosted Atanasoff Memorial Day, a new joint initiative of IMI-BAS and the International Center for Mathematical Sciences (ICMS-Sofia) at IMI-BAS.

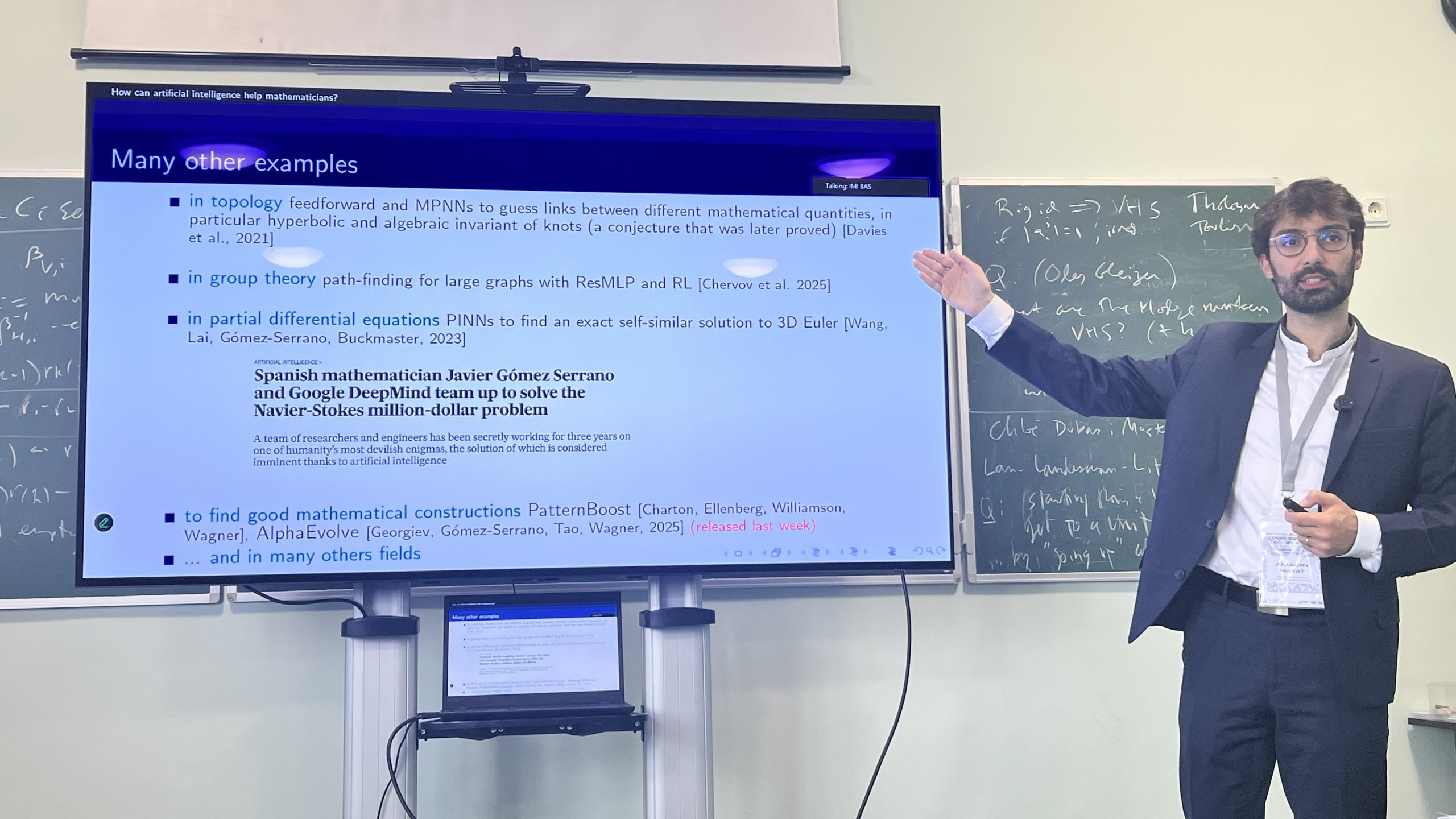

The organizers’ ambition is to hold the Atanasoff Memorial Lecture Series every year, commemorating the life of John Vincent Atanasoff (1903–1995), a computer pioneer and inventor of an early electronic digital computer, renowned as the “Bulgarian father of the electronic computer”. The series aims to celebrate the intersection of mathematics, computation, and the sciences of complexity: the very domains that unite topology, geometry, and machine learning in the 21st century.

Each year, an internationally distinguished scientist is invited as the Atanasoff Lecturer to deliver a keynote lecture highlighting frontier ideas where mathematical structure meets computational innovation. The series provides an open platform for dialogue across fields: pure mathematics, physics, data science, and artificial intelligence. It also serves as a tribute to the scientific imagination of John Vincent Atanasoff, whose work laid the foundations of electronic computation.

The inaugural edition of the series took place at the International Center for Mathematical Sciences (ICMS-Sofia) at IMI-BAS on November 10, 2025. The program covered topics ranging from topology to machine learning, control, and data-driven modelling of complex systems.

This year’s keynote lecturer was Amaury Hayat, a French mathematician and applied scientist and Professor at École des Ponts–Institut Polytechnique de Paris, who works on the control and stabilization of PDEs and on applications of artificial intelligence to mathematics.

Other distinguished mathematicians who attended the first Atanasoff Memorial Day included:

- Raphaël Douady, French mathematician and economist, PhD (1982, Paris VII) in Hamiltonian dynamics, former Frey Chair of Quantitative Finance at Stony Brook (SUNY) and Academic Director at LabEx ReFi, Co-founder and Research Director of Riskdata, with decades of work in chaos theory, systemic-risk modelling, polymodel theory and machine-learning methods in finance.

- Carlos Simpson, American algebraic geometer, PhD from Harvard (1987) under Wilfried Schmid with a thesis on Systems of Hodge Bundles and Uniformization, Research Director at CNRS, Université Côte d’Azur. His work includes non-abelian Hodge theory, higher categories, moduli spaces and computer-aided proof verification.

- Yuri Tschinkel, Russian-German-American algebraic geometer, PhD from MIT (1992), Junior Fellow at Harvard Society of Fellows, Gauss Chair at Göttingen, Chair at Courant (NYU), and Director at the Simons Foundation. His research is in the field of rational points, birational geometry and the arithmetic of high-dimensional varieties.

- Phillip A. Griffiths, American mathematician, Institute for Advanced Study (IAS Princeton). Renowned for foundational work in Hodge theory, algebraic geometry and differential geometry, as well as for his leadership in shaping modern mathematical institutions and research communities. For his outstanding contributions to the development of modern mathematics, for his exceptional role in fostering international collaborations with IMI–BAS, and for his efforts in strengthening the Institute’s position as a leading international research centre, in 2025 Prof. Griffiths was awarded the Medal with Ribbon of IMI–BAS.

Prof. Phillip Griffiths was Awarded the Medal with Ribbon of IMI–BAS

According to a decision of the Scientific Council of the Institute of Mathematics and Informatics at the Bulgarian Academy of Sciences (IMI–BAS), taken on July 11, 2025, the Medal with Ribbon of IMI–BAS was awarded to Prof. Phillip Griffiths, Institute for Advanced Study, USA.

The distinction is conferred for his outstanding contributions to the development of modern mathematics, for his exceptional role in fostering international collaborations with IMI–BAS, and for his great efforts in establishing the Institute’s position as a leading international research center.

The medal was presented online by Prof. Velichka Milousheva, Deputy Director of IMI–BAS and of the International Center for Mathematical Sciences (ICMS–Sofia).

Prof. Phillip Griffiths is one of the most influential mathematicians of the last half-century with fundamental contributions that have reshaped modern geometry. Renowned for his work in algebraic and differential geometry, Griffiths has made groundbreaking advances in areas ranging from Hodge theory to partial differential equations. His ideas and results have not only solved long-standing problems but also opened entirely new avenues of research.

In recognition of his profound impact, he has received numerous honors, including the Chern Medal (2014), the Steele Prize (2014), and the Wolf Prize in Mathematics (2008). He is a member of the U.S. National Academy of Sciences, the Russian Academy of Sciences and the Indian Academy of Sciences.

IMI will present three innovative projects at the International Forum “Advanced ICT Research and Innovation”

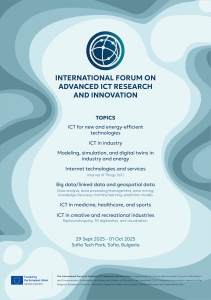

From September 29 to October 1, 2025, the Council on Science and Innovation in Information and Communication Technologies and the Bulgarian Academy of Sciences are organizing the International Forum “Advanced ICT Research and Innovation.” The event is held under Investment C2.I2 “Enhancing the Innovation Capacity of BAS in the Field of Green and Digital Technologies,” part of the National Recovery and Resilience Plan.

The Institute of Mathematics and Informatics at the Bulgarian Academy of Sciences will take an active part in the forum, presenting three projects funded under the Recovery and Resilience Mechanism:

- “Innovative Software Platform for Serious Educational Games with Creative Visualization for Building Competence and Responsible Management of Natural Resources – ProNature” led by Assoc. Prof. Detelin Luchev, PhD (Department of Mathematical Linguistics);

- “Integrated Framework for Health Service Improvement via Analysis of Patient Reported Outcomes Data” led by Assoc. Prof. Krasimira Ivanova, PhD (Department of Software Technologies and Information Systems), and

- “Models, Methods and Tools for Predicting the Technical and Perceived Quality of Service in Cyber-Physical Social Systems” led by Prof. Ivan Ganchev, PhD (Department of Information Modelling).

The forum will present scientific research and innovations with practical applications and technology transfer. Its aim is to foster cooperation between academia, industry, and the startup ecosystem, encouraging transformation towards sustainable and digital solutions. The event will provide a platform for exchanging ideas, presenting results, and building new partnerships in the field of green and digital technologies.

Key topics include:

- ICT for new and energy-efficient technologies;

- ICT in industry;

- Modelling, simulation, and digital twins in industry and energy;

- Internet technologies and services. Internet of Things (IoT);

- Big data/linked data and geospatial data – Data analysis, data processing/management, data mining; knowledge discovery; machine learning; prediction models;

- ICT in medicine, healthcare, and sports;

- ICT in creative and recreational industries. Rapid prototyping, 3D digitization, and visualization.

The program runs from September 29 to October 1, 2025, at Sofia Tech Park, The Venue Hall, ground floor of the Incubator building.

The registration form is available here: https://docs.google.com/forms/d/e/1FAIpQLScC6v7V-a_TeoTEVHBT5lhwRJj-4yqg4d0JYNejJbOiPdKAeg/viewform?usp=header

Fifteenth International Conference Digital Presentation and Preservation of Cultural and Scientific Heritage – DiPP2025

From September 25 to 28, 2025, the Institute of Mathematics and Informatics at the Bulgarian Academy of Sciences, under the patronage of UNESCO and with the support of the National Scientific Fund of Bulgaria (contract КП-06-МНФ/2 of 06.06.2025) and the Municipality of Burgas, is organizing the Fifteenth International Conference Digital Presentation and Preservation of Cultural and Scientific Heritage – DiPP2025.

The conference seeks to highlight innovations, projects, and scholarly as well as applied research in the domains of digital documentation, archiving, representation, and preservation of both global and national tangible and intangible cultural and scientific heritage. Particular emphasis is placed on providing open access to digitized national cultural and scientific heritage, and on advancing sustainable policies for its long-term digital preservation and safeguarding. Keynote speakers invited to the forum include Dr. Giuliano Guffrida from the Vatican Apostolic Library, Prof. Dr. Ioannis Pratikakis from Democritus University of Thrace, and Prof. Dr. Marco Scarpa from the University of Messina, among others, who will present innovative projects and technological developments in the field of digital palaeography.

DiPP2025 will open on September 25, 2025, at 12:30 PM at the Cultural Center Sea Casino in Burgas. The event supports Burgas’ candidacy for European Capital of Culture 2032.

Co-organizers: Regional Academic Center of BAS – Burgas; Regional Historical Museum – Burgas; Burgas Free University; Regional Library Peyo Yavorov – Burgas; Burgas State University Prof. A. Zlatarov.

Accompanying events:

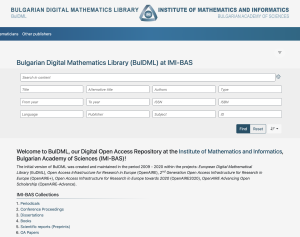

- The 16th National Information Day: Open Science, Open Data, Open Access, Bulgarian Open Science Cloud;

- 4th Information Day Research Infrastructure Services in the Humanities and Social Sciences, organized by the Institute of Mathematics and Informatics – BAS, presenting research outcomes of the project National Interdisciplinary Research E-Infrastructure for Resources and Technologies for the Bulgarian Language and Cultural Heritage, integrated within the European infrastructures CLARIN and DARIAH (CLADA-BG).

Further information about the conference is available on the DiPP2025 website: http://dipp2025.math.bas.bg/.

Research reports presented at the forum are published in the peer-reviewed Proceedings, indexed in Web of Science and Scopus (SJR), and accessible online at http://dipp.math.bas.bg

The International Conference Celebrating the Consortium IMSAC brings together mathematicians from four continents

From August 7 to 9, 2025, the Institute of Mathematics and Informatics at the Bulgarian Academy of Sciences (IMI–BAS) will host the International Conference Celebrating the Consortium IMSAC – an event that brings together distinguished mathematicians from around the world.

The conference is jointly organized by the International Center for Mathematical Sciences – Sofia (ICMS-Sofia) at IMI–BAS and the Institute for the Mathematical Sciences of the Americas (IMSA) at the University of Miami.

ICMS-Sofia is a partner in the Institute of the Mathematical Sciences of the Americas Consortium (IMSAC), which includes a group of universities from Latin America, the Caribbean, Europe, and the USA. The consortium aims to foster collaboration between higher-education institutions and to establish joint research activities. It was founded in 2020 on the initiative of Ludmil Katzarkov, with the goal of amplifying the effect of collaboration and obtaining stronger mathematical results in the following directions: the creation of the Theory of Atoms, non-Kähler Hodge theory, and self-organized chaos. Several of the research directions at ICMS-Sofia stem from this collaboration.

The Celebrating the Consortium IMSAC conference will take place in Hall 403 at the Institute of Mathematics and Informatics in Sofia. The programme starts with a talk by the Fields Medalist Caucher Birkar. Over the course of three days, leading researchers from the IMSAC network will present their latest results, discuss joint projects, and explore new directions in mathematics and its applications.

This international meeting is a testament to the strength of global academic partnerships and the growing role of ICMS-Sofia as a center for high-level mathematical research. The event reaffirms ICMS-Sofia’s mission to foster scientific excellence and international collaboration within the framework of IMSAC.
